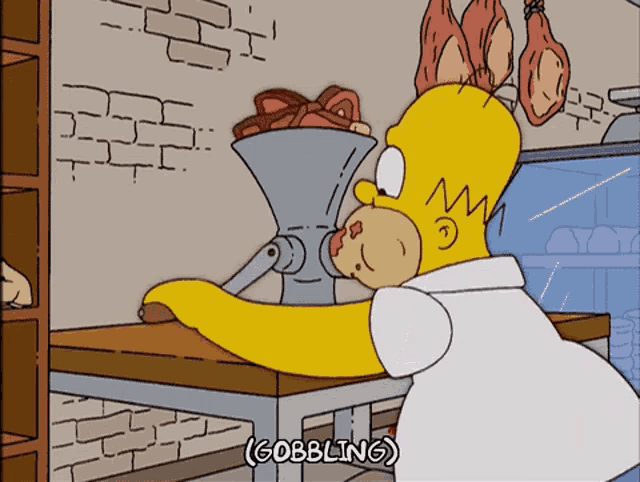

GenAI? How about BarfAI?

"A study of GPT-3.5-based ChatGPT's ability to solve 728 coding problems: fairly good at solving problems that existed before 2021, but struggles with newer ones. A reasonable hypothesis for why ChatGPT can do better with algorithm problems before 2021 is that these problems are frequently seen in the training dataset."

https://spectrum.ieee.org/chatgpt-for-coding

The dirty little secret of LLMs is that they just reshuffle historical data and repackage it in a human-readable format, using statistical grammar clustering rather than NLP rules. There is nothing Generative about GenAI. We should just rename it Regurgitative AI, or BarfAI for short.

Sausage Grinder AI may offend the vegans. 😁

There are NO DATASETS you can train an LLM on to GENERATE new ideas, sequences, patterns, etc. Here's a simple gut check: what dataset could you train ChatGPT 10.0 on so that it could correctly answer these questions?

1) Create me a GenAI startup business plan that will generate more revenue than its training costs.

2) Give me a protein or nucleic acid sequence with enzymatic properties that attaches to cancerous cells and kills them, but no other cells, and that works in vivo in humans.

LLMs are not the path to AGI, if that's what folks are expecting or fearing, unless we discover a time portal that connects to future datasets for LLM training or RAG sources.

The reports of humanity's demise are greatly exaggerated.